The AI-DePIN Convergence: How Shaga Bridges Compute, Data, and Demand

AI’s demand for distributed compute and DePIN’s need for intelligent coordination are converging into one market, where infrastructure, data, and intelligence operate on shared incentives. Shaga was built to bridge these layers through consumer gaming.

Key Points

• AI infrastructure faces major constraints: McKinsey reports that only 19% of executives see revenue gains above 5% from AI, while BCG finds that 74% of companies struggle to scale AI value, with infrastructure among the key bottlenecks. Together these pressures make rising demand for distributed infrastructure increasingly likely.

• DePIN specialists demonstrate that individual layers operate effectively at scale: Bittensor reported 128 active subnets as of September 2025, Theta Labs reported 80 PetaFLOPS of distributed GPU compute as of mid-2025, and IoTeX reported 1,600% growth in device wallet addresses as of September 2025.

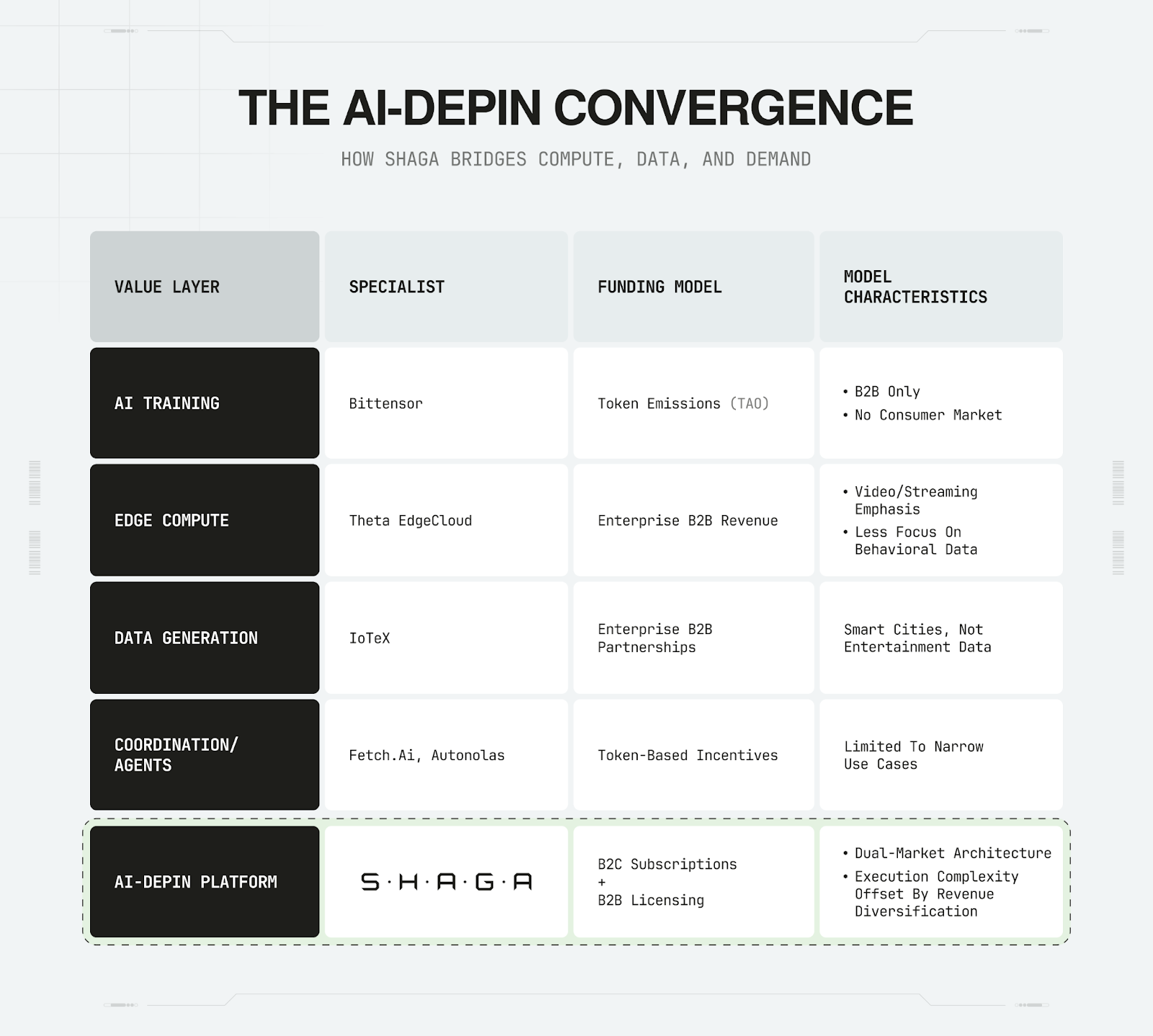

• Each excels at one layer (AI training, edge compute, or data generation), but few integrate the full stack with consumer-driven models that could extend toward enterprise applications.

• Shaga is designed to integrate all three layers plus gaming revenue: behavioral data generation, edge AI infrastructure, and intelligent coordination funded by consumer subscriptions.

The AI boom and the DePIN revolution seemed like separate stories until 2025. AI companies realized they need distributed infrastructure to scale. DePIN projects realized they need intelligent coordination to compete. The convergence has reached a tipping point, yet the market still treats AI and DePIN as separate sectors.

What makes AI-DePIN integration defensible? True integration creates compounding network effects: more compute enables better data capture, which improves AI coordination, which attracts more compute providers, all operating on shared networks with unified incentives.

2025 marks the inflection point, and Shaga was architected to serve this synthesis from inception. The fusion is no longer theoretical. As AI labs compete for scarce behavioral data and GPU access, Shaga enters with both already in motion: live infrastructure with telemetry collection, and enterprise partnerships in development. This timing advantage, with operations live while several comparable integrations remain in testnet or limited release, shapes Shaga’s development priorities.

Why Infrastructure Limits Are Forcing AI Toward DePIN

AI companies are hitting the limits of centralized infrastructure. McKinsey reports that only 19% of executives see AI boost revenues by more than 5%, and BCG finds that 74% of companies struggle to scale and achieve value from AI investments. The causes include data quality, talent, and governance, with infrastructure a key bottleneck. Memory bandwidth limitations leave expensive GPU clusters underutilized. Grid interconnection delays of up to seven years in some regions further constrain scaling capacity.

Meanwhile, the DePIN market reached $30-50 billion in market capitalization by 2024-2025, proving that token incentives can bootstrap decentralized physical infrastructure at global scale. But pure infrastructure isn’t enough: DePIN networks now require AI-driven coordination to achieve efficiency comparable to centralized infrastructure.

These constraints don’t just create a gap: they define the landscape. Every limitation facing AI infrastructure amplifies the need for distributed systems like Shaga, where compute, data, and demand already align by design.

While these constraints push AI toward distributed solutions, several DePIN specialists are already proving that individual layers of this stack work at scale.

Specialists Proving Each Layer Works

Three projects demonstrate that AI + DePIN integration isn’t theoretical: it’s operational, with strong metrics and reported enterprise usage.

Bittensor operates decentralized AI training in which miners provide infrastructure and validators coordinate intelligence across a reported 128 active subnets (as of September 2025), with a TAO market capitalization in the hundreds of millions. Bittensor demonstrates that distributed AI training with token incentives works at scale.

Theta EdgeCloud provides 80 PetaFLOPS of distributed GPU computing (reported by Theta Labs as of mid-2025), with reported cost savings of 70% versus traditional cloud providers for AI inference workloads. The platform combines 30,000+ community edge nodes with cloud-based GPUs and reports enterprise usage. Theta demonstrates that edge AI infrastructure can deliver enterprise-grade performance.

IoTeX launched its AI stack roadmap in July 2025, connecting IoT devices to AI training. The platform reported 1,600% growth in device owners (measured by wallet addresses, as of September 2025) and a 300% month-over-month increase in data requests, along with announced enterprise partnerships. IoTeX demonstrates that physical devices can generate verified real-world data for AI training at scale.

Projects like Fetch.ai explore AI agents on blockchain networks, but none combines distributed AI training, edge compute infrastructure, and consumer entertainment data generation with sustainable revenue streams. The AI+DePIN stack remains fragmented across different platforms and business models.

Inside Shaga’s Compute-Data-Coordination Stack

Most specialists chose verticals: Bittensor picked AI training, Theta picked edge compute, IoTeX picked IoT data. But Shaga was designed as a cross-stack platform where compute, data, and coordination reinforce each other from inception. Coordination refers to software agents that route gaming sessions, manage data capture, and handle AI inference with on-chain rewards for operators.
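
To make the routing role concrete, here is a minimal sketch of how such an agent might pick a node for a gaming session. It is not Shaga's actual implementation: the `Node` fields, the 40 ms latency budget, and the `route_session` function are all hypothetical, chosen only to illustrate latency-aware selection among operator nodes.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Node:
    """A hypothetical operator node; every field is an illustrative assumption."""
    node_id: str
    latency_ms: float      # measured round-trip time to the player
    free_gpu_slots: int    # sessions the node can still accept
    data_opt_in: bool      # whether the operator opted in to data capture


def route_session(nodes: List[Node], max_latency_ms: float = 40.0) -> Optional[Node]:
    """Return the lowest-latency node with a free slot, or None if none qualifies."""
    eligible = [
        n for n in nodes
        if n.free_gpu_slots > 0 and n.latency_ms <= max_latency_ms
    ]
    if not eligible:
        return None
    # Prefer lower latency; break ties in favor of nodes with more spare capacity.
    return min(eligible, key=lambda n: (n.latency_ms, -n.free_gpu_slots))


if __name__ == "__main__":
    candidates = [
        Node("a", latency_ms=55.0, free_gpu_slots=2, data_opt_in=True),
        Node("b", latency_ms=18.0, free_gpu_slots=1, data_opt_in=False),
        Node("c", latency_ms=12.0, free_gpu_slots=0, data_opt_in=True),
    ]
    chosen = route_session(candidates)
    print(chosen.node_id if chosen else "no eligible node")
```

A production coordinator would also weigh factors this sketch ignores, such as operator reputation, on-chain reward accounting, and concurrent AI inference load.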

Shaga integrates decentralized AI infrastructure with edge compute and behavioral data generation, bridging capabilities proven individually by projects like Bittensor, Theta, and IoTeX. Authenticated gameplay data, the kind of high-signal behavioral data sought for emerging world models such as Google Genie, is far scarcer than text or image datasets.

The integration advantage: Shaga combines Bittensor’s decentralization, Theta’s edge compute infrastructure, and IoTeX’s data generation with consumer gaming demand that funds the entire stack. The funding flywheel is designed so that consumer revenue funds edge infrastructure, enabling more data capture and higher enterprise value, creating a loop that sustains both markets.

One session, two revenues: the same gameplay both delights users and produces authenticated datasets. All gameplay data collection is opt-in for node operators. It is designed to exclude player-identifiable information and to align with enterprise compliance standards.
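
The opt-in and exclusion rules described above can be sketched as a simple capture filter. This is an assumption-laden illustration, not Shaga's pipeline: the field names in `PLAYER_IDENTIFIABLE_FIELDS`, the event shape, and the `prepare_record` function are hypothetical.

```python
from typing import Any, Dict, Optional

# Hypothetical set of fields treated as player-identifiable in this sketch.
PLAYER_IDENTIFIABLE_FIELDS = frozenset(
    {"player_id", "ip_address", "email", "display_name"}
)


def prepare_record(
    raw_event: Dict[str, Any], operator_opted_in: bool
) -> Optional[Dict[str, Any]]:
    """Return a capture-ready record, or None when the operator has not opted in."""
    if not operator_opted_in:
        # Consent-first: without opt-in, nothing is captured at all.
        return None
    # Drop every field on the exclusion list; keep the gameplay signal.
    return {
        key: value
        for key, value in raw_event.items()
        if key not in PLAYER_IDENTIFIABLE_FIELDS
    }


if __name__ == "__main__":
    event = {
        "player_id": "p-123",
        "ip_address": "203.0.113.7",
        "frame": 4812,
        "input": "jump",
    }
    print(prepare_record(event, operator_opted_in=True))
    print(prepare_record(event, operator_opted_in=False))
```

A deny-list like this is the simplest possible form. An allow-list of approved fields would fail safer when new event fields are introduced.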

As established in our analysis of Shaga’s dual-revenue model (see: “Dual Revenue, One Network”), gaming subscriptions (B2C) enable enterprise AI data licensing (B2B), creating sustainable unit economics that specialists lack.

Why Legacy Models Can’t Retrofit Unified Architectures

To achieve sustainable AI+DePIN integration, platforms must have three critical components:

1. Consumer demand: Sustainable revenue stream to fund infrastructure development and maintain operations without relying primarily on token incentives.

2. Authenticated data capture: Technical and legal architecture for generating enterprise-compliant training data with proper consent frameworks and verification systems.

3. Edge inference capabilities: Low-latency compute infrastructure that can serve both consumer applications and AI workloads with quality-of-service guarantees.

Most specialists have one of these three: Bittensor has AI infrastructure but a limited consumer market, Theta has edge compute but limited data generation, and IoTeX has data capture but an enterprise B2B focus. Shaga’s gaming platform enables all three: consumer gaming revenue, authenticated gameplay data capture, and edge infrastructure for gaming and AI inference.

The retrofit challenge is business model evolution: specialists would need to develop new market expertise, user bases, and technical capabilities outside their core competencies.

This integration isn’t hypothetical for Shaga: the stack is operating in invite-only testing, and data licensing is being developed to meet enterprise compliance requirements. The gaming infrastructure already supports distributed compute, and gameplay sessions already generate structured data. While specialists experiment with individual convergence pieces, Shaga combines all layers with consumer demand providing sustainable funding.

Shaga’s Position in the AI-DePIN Convergence: Why Now and How It Leads

As of 2025, Shaga is an early AI-DePIN platform advancing from concept to operation. The network combines distributed gaming infrastructure with authenticated gameplay data pipelines, introducing a dual-stream model (B2C live, B2B licensing in development). Most comparable networks still focus on individual layers such as compute, data, or coordination rather than unified integration.

Timing and differentiation: While projects such as Bittensor focus on decentralized AI training and Theta on edge compute, Shaga combines these capabilities with consumer-driven demand that supports infrastructure growth. Both monetization mechanics and compliance considerations are integrated from the start.

Execution status: The gaming infrastructure is live, system telemetry has been tested internally for stability, and data-capture frameworks are under development with consent-first, enterprise-grade compliance standards. Built on Solana’s DePIN architecture, the network is designed to use low transaction fees for node-level operations and sub-second finality for real-time coordination. Scaling both consumer and enterprise functions in parallel introduces complexity but supports long-term sustainability, as user activity helps offset infrastructure costs.

Category outlook: As AI and DePIN continue to merge into a unified value chain, combining compute, data, and coordination, platforms that integrate these layers from inception are likely to shape the next phase of industry development. Shaga’s approach centers on authenticated gameplay telemetry, a scarce and privacy-aligned data source that supports new ways of connecting consumer engagement with distributed infrastructure, aligning the network with the next stage of AI-DePIN evolution.